Why SWS mobile survey may be statistically flawed

By Dean Jorge Bocobo, first published on his Facebook page.

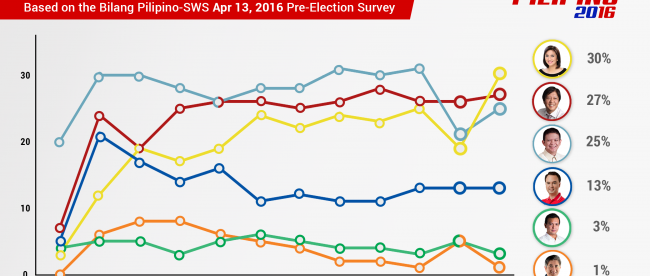

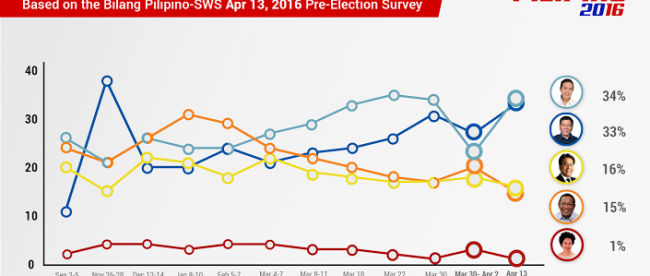

(MANILA – In the Bilang Pilipino April 13 and 14 SWS Mobile Survey Report, exclusive to TV5 NETWORK, the presidential aspirants obtained the following scores: Poe 34%, Duterte 33%, Roxas 16%, Binay 15%, and Santiago 1%. For the vice presidential race, it’s Robredo 30%, Marcos 27%, Escudero 25%, Cayetano 13%, Trillanes 3%, and Honasan 1%.)

However, before you start rejoicing OR gnashing your teeth (depending on whom you are rooting for), please be aware that this “Mobile Survey” differs from the usual SWS pre-election surveys in several very significant ways:

1. Although it uses 1200 respondents who were presumably selected randomly from validated voters’ lists, the survey re-uses the same sample of voters for each subsequent run, which makes the sample more like a “focus group” or “panel”. I believe the regular surveys draw a fresh random sample for each repeat poll. Re-using one fixed panel means the respondents’ identities are more susceptible to “leaks”, and the panel is therefore less secure against gaming or undue influence.

2. In the April run of this Mobile Survey, only 56% (676 out of 1200) of the original panel actually responded. Yet the mass media are blithely treating this as just a smaller sample and naively assigning it a margin of error of plus or minus 4%, which is what a simple random sample of 676 people would give. I think this is wrong, because WHY did 44% of the panel NOT respond? It suggests a kind of SELF-SELECTION, which happens in many unscientific polls. If the people who chose to answer lean differently from those who did not, the results are biased in a way that no margin of error captures. This may not entirely invalidate the results, but the margin of error is almost certainly NOT what it would be if the actual respondents had been randomly selected.
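To make the arithmetic in point 2 concrete, here is a minimal Python sketch. It computes the textbook margin of error for a proportion at roughly 95% confidence, which assumes simple random sampling and is where the quoted ±4% for 676 respondents comes from. It then runs a purely hypothetical nonresponse scenario: the 30% true support and the 70% and 50% response rates are invented for illustration, not SWS data, and the function names are my own.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of an approximate 95% confidence interval for a proportion.

    Valid only under simple random sampling; p = 0.5 gives the largest value.
    """
    return z * math.sqrt(p * (1 - p) / n)


def observed_share(true_share: float, rate_supporters: float, rate_others: float) -> float:
    """Candidate's share among those who answer, when supporters and
    non-supporters respond at different (hypothetical) rates."""
    answering_supporters = true_share * rate_supporters
    answering_others = (1 - true_share) * rate_others
    return answering_supporters / (answering_supporters + answering_others)


def overall_response_rate(true_share: float, rate_supporters: float, rate_others: float) -> float:
    """Fraction of the whole panel that answers under the same assumptions."""
    return true_share * rate_supporters + (1 - true_share) * rate_others


# Figures from the April run of the panel.
panel_size, respondents = 1200, 676
print(f"Response rate: {respondents / panel_size:.1%}")
print(f"MOE if all {panel_size} had answered: ±{margin_of_error(panel_size):.1%}")
print(f"MOE for {respondents} random respondents: ±{margin_of_error(respondents):.1%}")

# Hypothetical panel: 30% true support; supporters answer 70% of the time, others 50%.
true_share, r_sup, r_oth = 0.30, 0.70, 0.50
print(f"Overall response rate: {overall_response_rate(true_share, r_sup, r_oth):.0%}")
print(f"Observed share: {observed_share(true_share, r_sup, r_oth):.1%} vs true {true_share:.0%}")
```

In this made-up case the overall response rate comes out at 56%, yet the candidate scores 37.5% among respondents against a true 30%. That 7.5-point error is roughly twice the ±3.8% margin, and the margin-of-error formula cannot detect it, because it only measures random sampling noise, not who chose to answer.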

Mobile surveys are definitely the future of polling because they offer the possibility of much larger respondent samples with quicker turnaround times than face-to-face surveys. However, this is the first time SWS is doing this, so the survey model is untested and unproven. Validating it will require a careful comparison of the official results with the survey’s last snapshot of the race.

So if you are rooting for Roxas or Binay, do not despair, even if the survey shows them in the teens, well behind Poe and Duterte. Likewise, Robredo, Bongbong and Chiz fans should stay on the edge of their seats.

There is another important factor, recently brought to my attention by political consultant Malou Tiquia: the distinction between “market” votes and “command votes”. While scientific polls may gauge public preferences quite accurately, within the precision their sample sizes allow, there is reason to think they do not account for the distortion caused by command voting in communities where local powers can dictate or strongly influence how people vote. In the past, for example, we have seen strange results not reflected in pre-election polling, with whole precincts or towns turning in 100% of their votes for one candidate.

Both Roxas and Binay are said to have considerable command votes through their allies and subalterns at the local level.